Arc XP CDN and PageBuilder Caching Layers

This document explains how your pages are rendered, how Arc XP caches both the page and its content sources, and how this ensures scalability and resiliency for your sites.

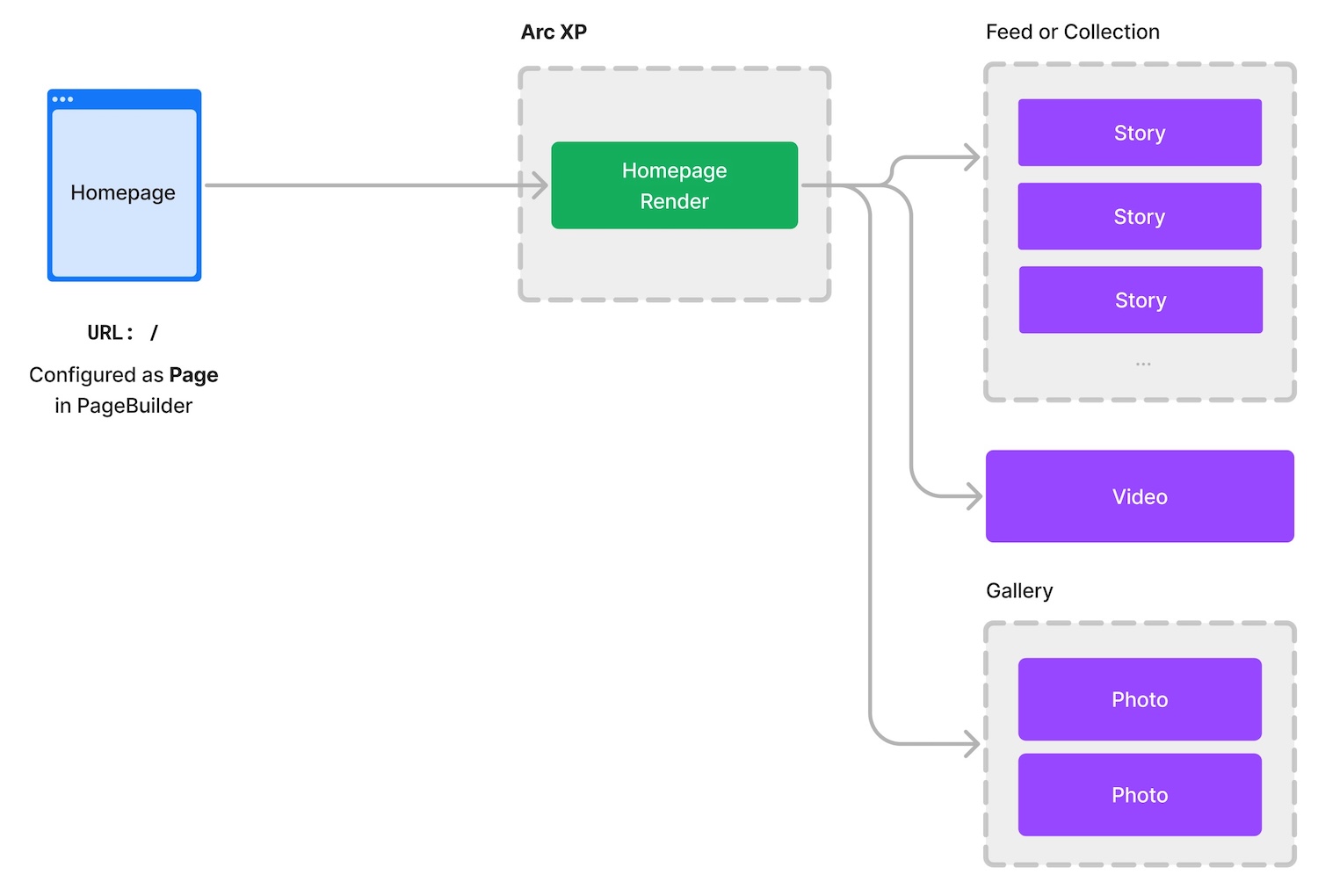

Let’s start with an example of the homepage.

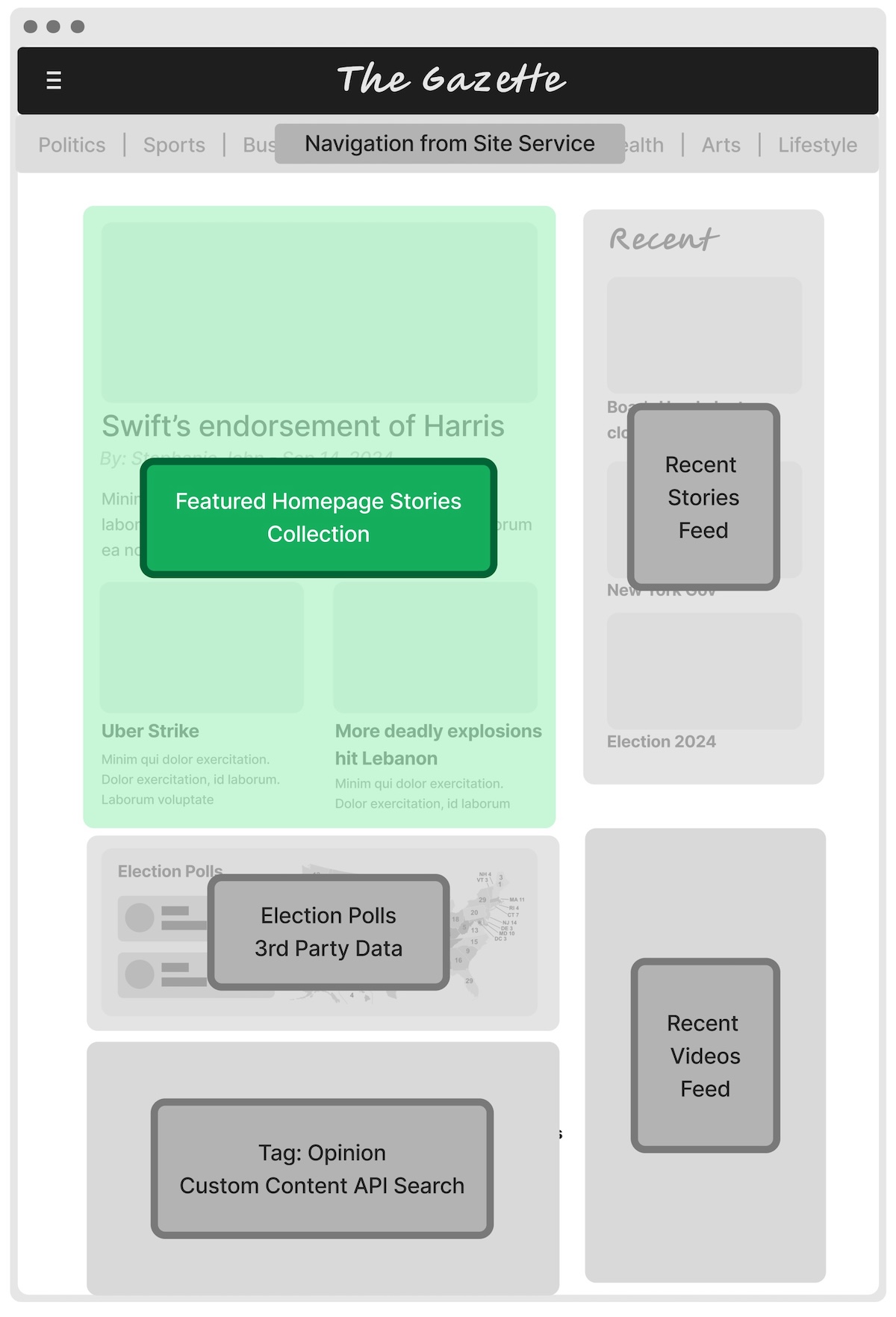

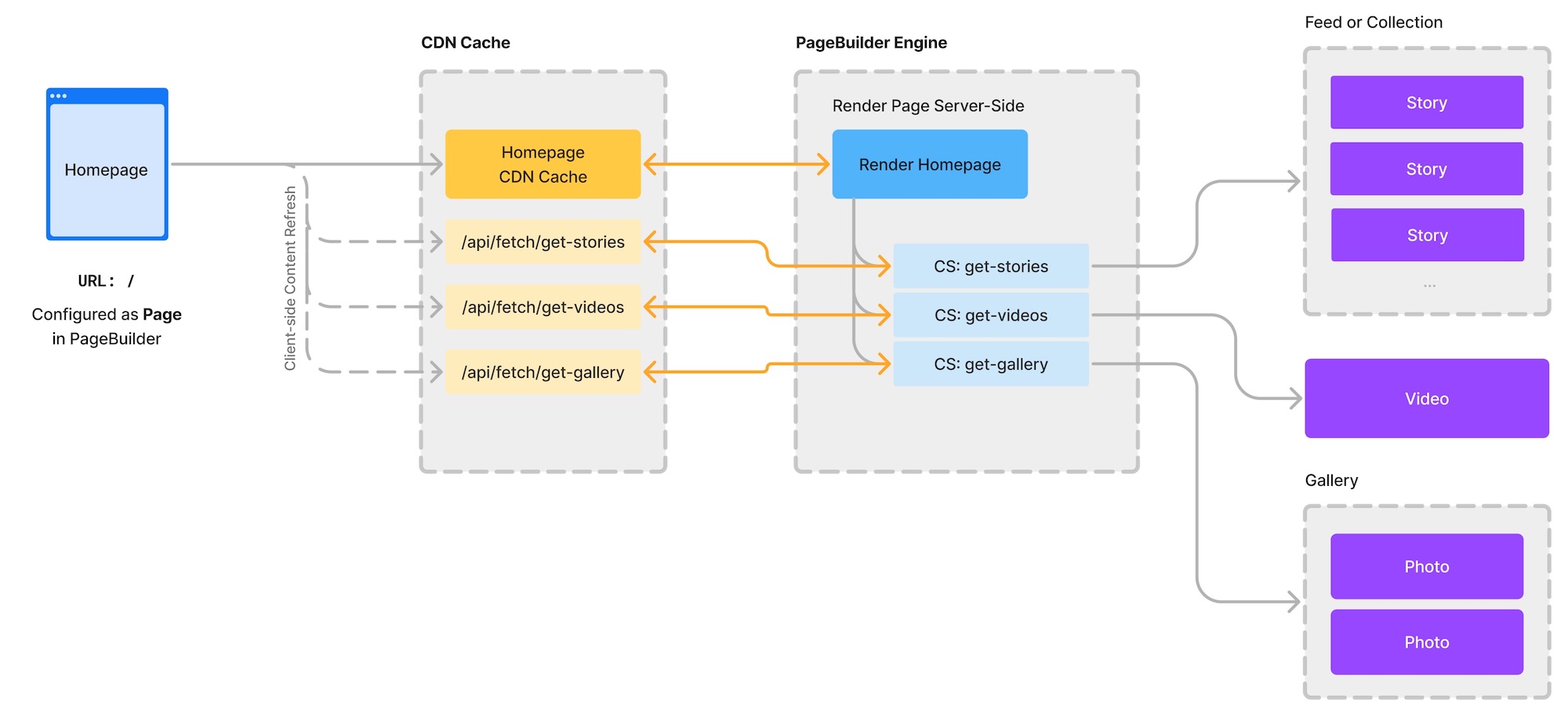

As discussed in Understanding how your page composition impacts content platform load, a page can include multiple content blocks and content sources that power the final page your readers see. The following diagram illustrates how your homepage might be rendered.

Arc XP CDN Layer

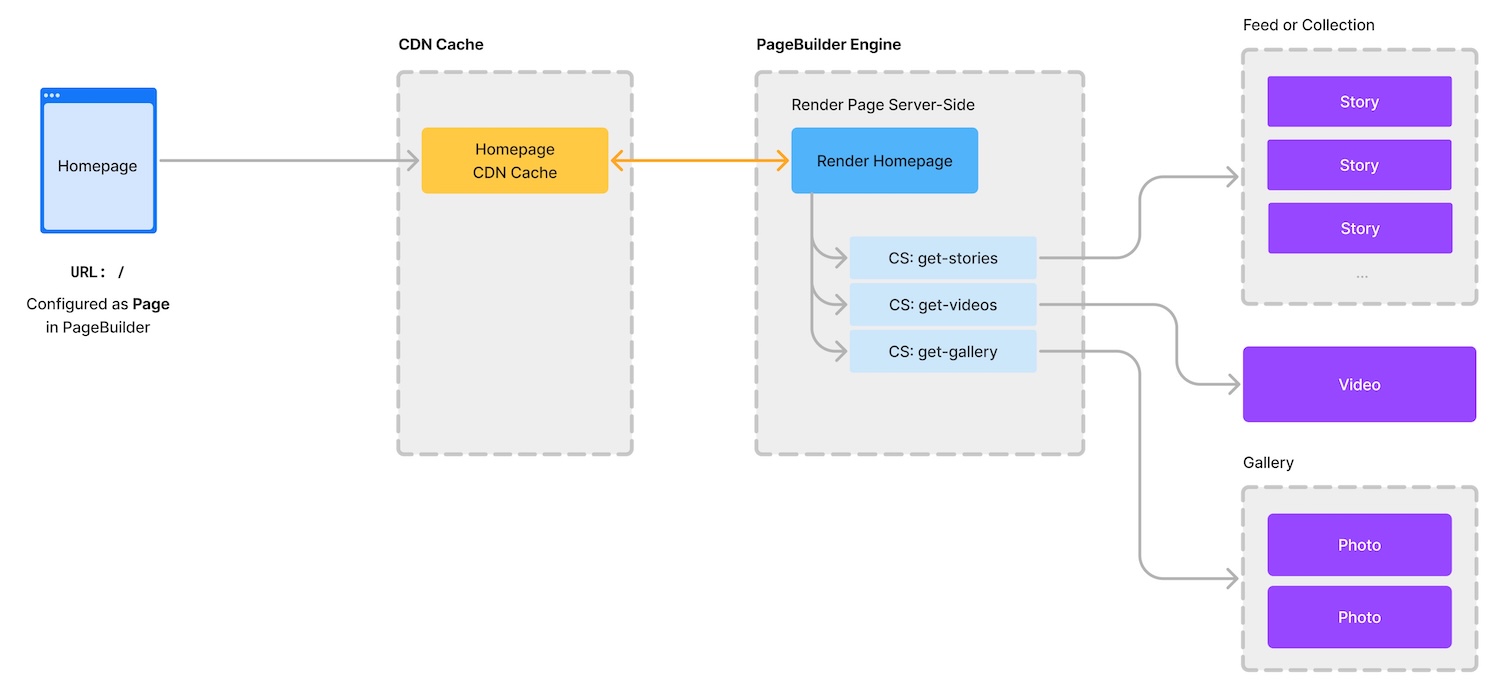

When a user requests a page, PageBuilder retrieves content from the Arc XP content platform APIs and/or external APIs to dynamically compile the page. After it’s rendered, the final HTML is cached at the Arc XP CDN layer, which is the first caching layer we use.

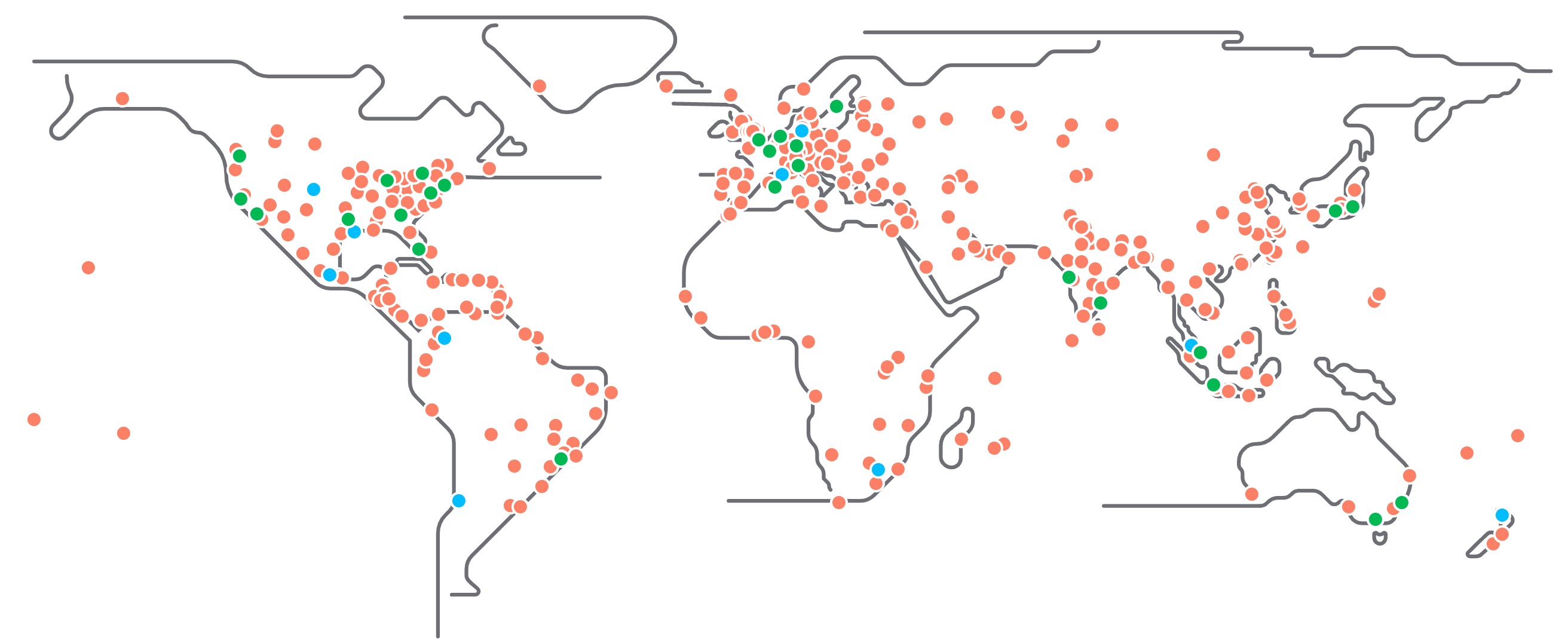

The CDN cache helps you serve a global audience quickly, regardless of where your editorial team or your Arc XP resources are based. For example, if you’re based in Europe and your Arc XP resources are configured in the EU region, the Arc XP CDN network still delivers content quickly to readers worldwide.

The global edge network includes 4,200 nodes in more than 130 countries.

For more information on how the Arc XP CDN network handles reader requests, see Arc XP's Content Delivery Network (CDN) Caching.

PageBuilder Cache Layer

Now, let’s examine the second caching layer: PageBuilder cache. Let’s switch our example to article detail pages to explain how PageBuilder cache helps scale traffic and reduce unnecessary load on the content platform and third-party APIs.

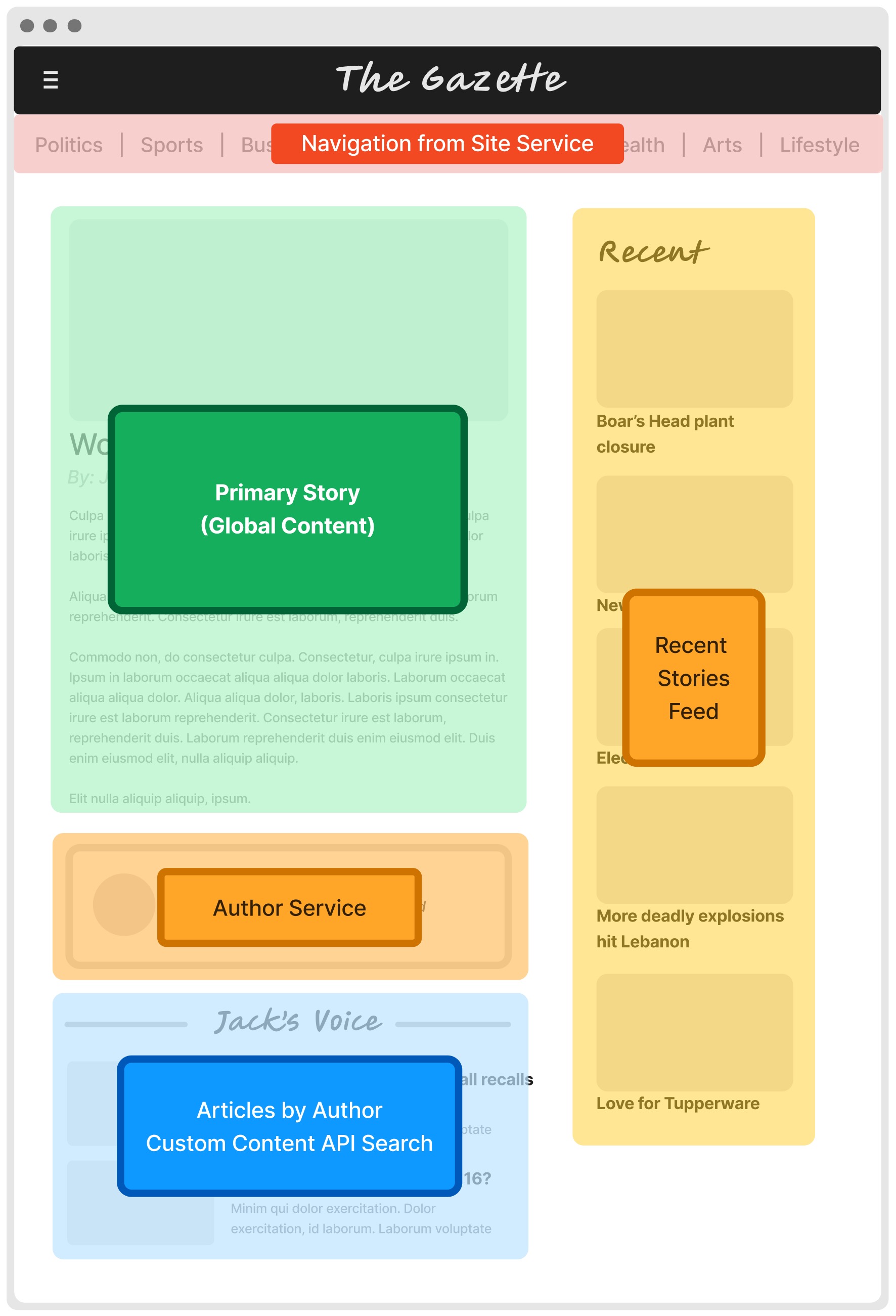

Imagine your article detail page uses the layout shown in the following diagram. This template renders 1,000 sports article pages, and as readers visit these pages, the system needs to handle their requests efficiently.

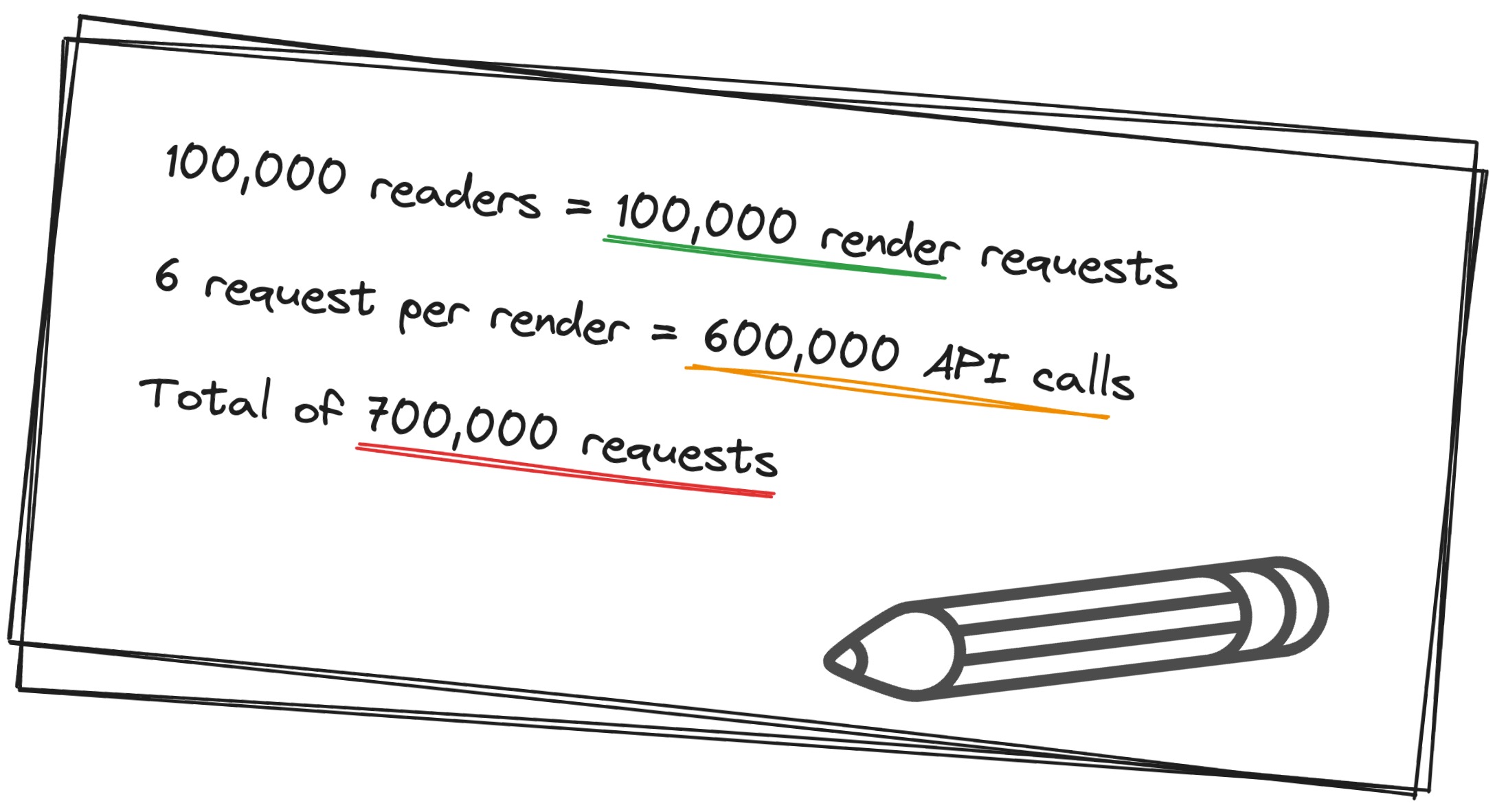

Let’s assume none of these pages are cached at the CDN, so every request requires a page render. Suppose 100,000 readers access 1,000 different articles with a perfect distribution, meaning 100 readers per article.

As discussed in Understanding how your page composition impacts content platform load, primary content (the unique article content) must be pulled from the content platform for each of the 1,000 articles. There’s no avoiding this.

However, secondary content, such as shared content blocks, is often the same or similar across multiple pages. For example:

- identical content - a block like the Recent Stories Feed may call the same Content API for all articles in the sports section, with only minor filtering differences.

- similar content - for the Author Bio block, you may have a limited number of authors, meaning many articles pull the same author bio data from the Author Service API.

Without caching, each page render would require multiple API calls, which can quickly overwhelm the system. Let’s break this down.

Without caching

Without caching, for each page render, we would need the following API calls:

- Site Service API for navigation

- Content API to fetch the primary article content

- Content API to fetch the Recent Stories feed (likely just sports articles)

- Google Analytics API to query top-viewed articles for the Most Read block

- Author Service API to fetch the author bio

- Content API to fetch stories by the current author

This results in six API calls per page render. For 100,000 reader requests, that’s a total of 600,000 API calls.
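The no-cache arithmetic can be written out as a short Python sketch. The call labels simply mirror the list above; nothing here calls a real API.

```python
# The six downstream calls each article render makes (labels mirror the list above).
CALLS_PER_RENDER = [
    "Site Service API: navigation",
    "Content API: primary article",
    "Content API: Recent Stories feed",
    "Google Analytics API: Most Read",
    "Author Service API: author bio",
    "Content API: stories by author",
]


def uncached_api_calls(reader_requests: int) -> int:
    """With no caching, every request renders the page and every render makes every call."""
    return reader_requests * len(CALLS_PER_RENDER)


print(uncached_api_calls(100_000))  # 600000
```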

Issues with this approach

The following issues exist with this approach:

- Slow rendering - if the calls run one after another, six calls at 100-200 milliseconds each add up to 600-1,200 milliseconds of render time, not including network latency, which can lead to noticeable delays for users.

- API rate limits - many APIs have rate limits to prevent overload. For example, the Google Analytics API limits requests to 100 per second or 50,000 per day. And while Arc XP’s CDN and render stack can scale rapidly, the underlying APIs cannot handle these high volumes.
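A quick sketch of both issues, assuming the six calls run one after another and using the latency range and rate limits quoted above. `ga_calls` assumes one Most Read call per uncached render of the 100,000 requests.

```python
CALLS_PER_RENDER = 6
LATENCY_RANGE_MS = (100, 200)  # per-call latency quoted in the text

# Sequential calls: the render waits for the sum of all six latencies.
render_ms = tuple(CALLS_PER_RENDER * ms for ms in LATENCY_RANGE_MS)
print(render_ms)  # (600, 1200)

# Google Analytics limits quoted in the text.
GA_LIMIT_PER_SECOND = 100
GA_LIMIT_PER_DAY = 50_000

# Assumption: one Most Read call per uncached render of 100,000 requests.
ga_calls = 100_000
print(ga_calls / GA_LIMIT_PER_SECOND)  # 1000.0 (seconds at the maximum rate)
print(ga_calls > GA_LIMIT_PER_DAY)     # True (exceeds the daily quota)
```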

How Arc XP handles this scenario

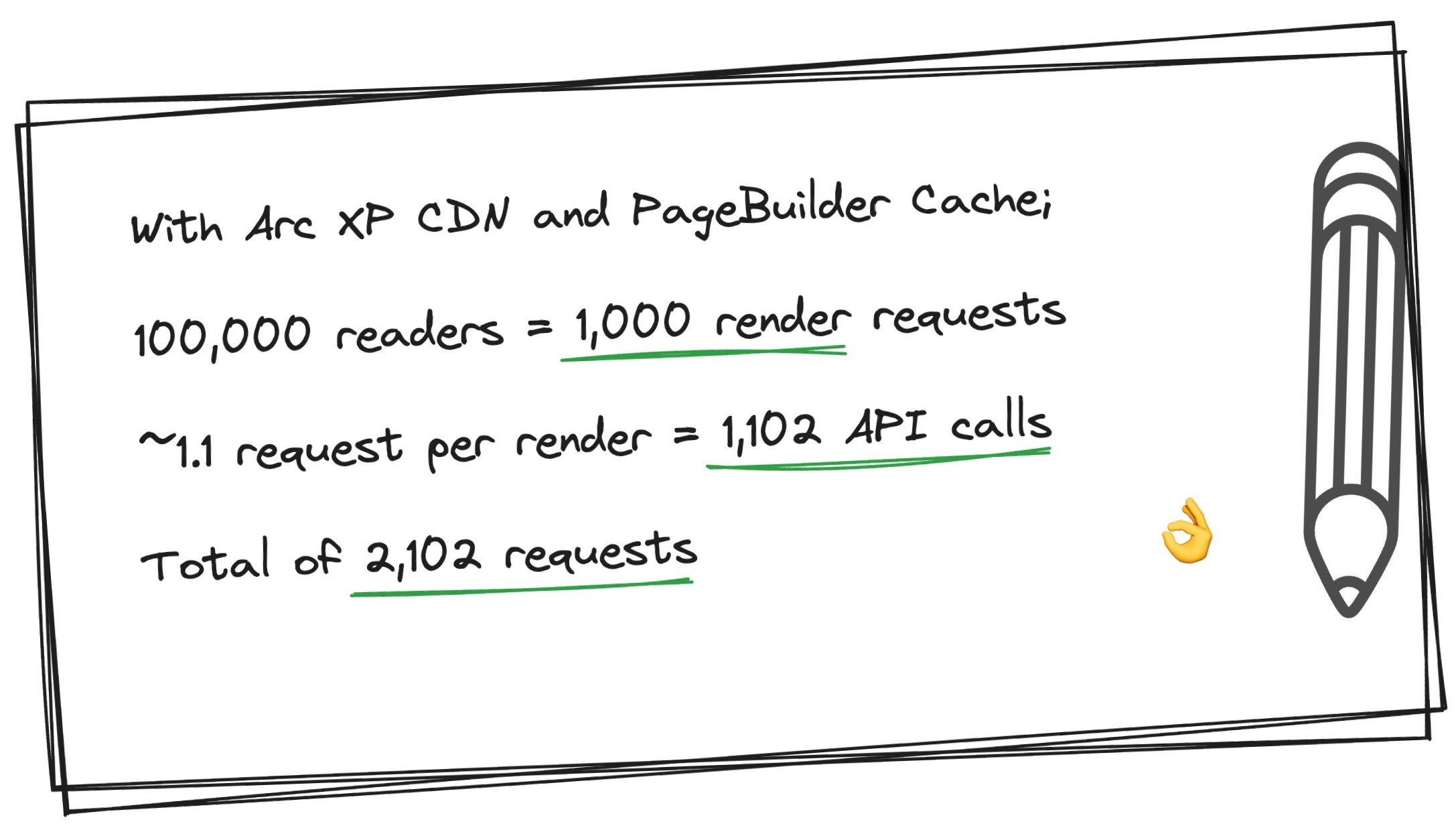

With the Arc XP CDN layer in place, the 100,000 requests are reduced to 1,000 page renders, but we still face a high volume of requests to the downstream APIs: 6,000 requests in total.
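The CDN’s effect can be simulated with a set keyed by URL. This is a simplified model, not how the Arc XP CDN is implemented: cached pages never expire during the window, and the `/sports/article-N` URLs are hypothetical.

```python
CALLS_PER_RENDER = 6


def simulate_cdn(requests: list[str]) -> tuple[int, int]:
    """Return (page renders, downstream API calls) behind a URL-keyed page cache.

    Simplification: cached pages never expire during the simulated window.
    """
    cdn_cache: set[str] = set()
    renders = 0
    for url in requests:
        if url not in cdn_cache:  # CDN miss: render the page once
            renders += 1
            cdn_cache.add(url)
    return renders, renders * CALLS_PER_RENDER


# 100,000 requests spread perfectly over 1,000 articles (100 readers each).
requests = [f"/sports/article-{i % 1000}" for i in range(100_000)]
print(simulate_cdn(requests))  # (1000, 6000)
```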

This is where PageBuilder cache comes in. PageBuilder caches each content source by its name and query parameters (together, these form an internal “cache key”). If the same request is made again, the cached content is used, reducing the need for repeated API calls.
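A minimal sketch of caching by content source name plus query parameters, assuming the query is a JSON-serializable dictionary. `ContentSourceCache`, `cache_key`, `fake_fetch`, and the source names are hypothetical; this is not PageBuilder’s internal key format, and the sketch ignores TTLs.

```python
import json
from typing import Any, Callable


def cache_key(source_name: str, query: dict[str, Any]) -> str:
    """Combine a content source name and its query into one string key.

    sort_keys=True makes the key independent of parameter order.
    """
    return f"{source_name}:{json.dumps(query, sort_keys=True)}"


class ContentSourceCache:
    """In-memory cache of content source results (TTLs are ignored here)."""

    def __init__(self, fetch: Callable[[str, dict[str, Any]], Any]) -> None:
        self._fetch = fetch              # the downstream API call
        self._store: dict[str, Any] = {}
        self.misses = 0                  # number of calls that reached the API

    def get(self, source_name: str, query: dict[str, Any]) -> Any:
        key = cache_key(source_name, query)
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._fetch(source_name, query)
        return self._store[key]


def fake_fetch(source_name: str, query: dict[str, Any]) -> dict[str, Any]:
    # Stand-in for a real API call.
    return {"source": source_name, "query": query}


cache = ContentSourceCache(fake_fetch)
cache.get("author-bio", {"slug": "jane-doe"})
cache.get("author-bio", {"slug": "jane-doe"})                   # hit
cache.get("recent-stories", {"section": "sports", "size": 10})
cache.get("recent-stories", {"size": 10, "section": "sports"})  # hit: same key
print(cache.misses)  # 2
```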

Let’s revisit our napkin math:

- The Most Read stories block is reduced from 1,000 requests to 1 Google Analytics API call, whose result can be cached even longer by customizing the TTL.

- The Recent Stories block is reduced from 1,000 requests to 1 Content API call for the stories.

- For the Author Bio and Author Stories blocks, let’s assume there are 50 authors. Regardless of story distribution, the total requests are:

  - 1,000 requests reduced to 50 Author Service API calls, one per author bio.

  - 1,000 requests reduced to 50 Content API calls, one per author’s stories.

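With caching in place, only 1,102 API calls are needed to serve 100,000 reader requests: 1,000 for the primary article content plus 1 + 1 + 50 + 50 for the shared blocks. (If the navigation query is the same on every page, the Site Service adds just one more call.)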

The beauty of this cache model is that, within the same time frame, 1 million, 10 million, or even 1 billion readers reading the same 1,000 sports articles would generate exactly the same render load and downstream API load.
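The napkin math and the scaling claim can both be checked with a small simulation of the two layers. Assumptions (all hypothetical): readers are spread perfectly across the articles, article `i` is written by author `i % 50`, nothing expires during the window, and the Site Service navigation call is left out so the total matches the 1,102 figure above.

```python
ARTICLES = 1_000
AUTHORS = 50


def downstream_calls(readers: int) -> int:
    """Count API calls behind a URL-keyed CDN cache and a content source cache."""
    cdn_cache: set[int] = set()                 # articles already rendered
    source_cache: set[tuple[str, str]] = set()  # (content source, query) keys
    calls = 0

    def fetch(source: str, query: str) -> None:
        nonlocal calls
        if (source, query) not in source_cache:  # only misses hit the API
            source_cache.add((source, query))
            calls += 1

    for reader in range(readers):
        article = reader % ARTICLES              # perfect distribution
        if article in cdn_cache:
            continue                             # CDN hit: no render at all
        cdn_cache.add(article)
        author = str(article % AUTHORS)
        fetch("article", str(article))           # primary content: unique per page
        fetch("most-read", "sports")             # shared by every page
        fetch("recent-stories", "sports")        # shared by every page
        fetch("author-bio", author)              # shared per author
        fetch("author-stories", author)          # shared per author
    return calls


for readers in (100_000, 1_000_000):
    print(readers, downstream_calls(readers))    # 1102 in both cases
```

Past the point where every article has been rendered once, extra readers only add CDN hits, which is why the count stays flat.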

Client-side Content Refresh (Hydration) and /pf/api Calls

PageBuilder uses a unique content refresh approach that detects expired content and makes client-side API calls to retrieve updated content for a block.

For example, when a reader loads a homepage cached at the Arc XP CDN, PageBuilder’s client-side rendering logic (in the browser) checks the freshness of block content, such as the featured stories block. You can configure this freshness threshold through your content source TTLs. If the block’s content has expired (based on the TTL, which we’ll explain later), PageBuilder makes an API call to fetch fresh content from the back end. If the content has changed, the block is re-rendered with the updated content. This process happens quickly, often without the user noticing.
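The refresh decision can be sketched as follows. It is written in Python to match the other examples (in the browser this logic runs as JavaScript), and `CachedBlock`, `refresh_if_stale`, `fetch_fresh`, and `rerender` are hypothetical names, not PageBuilder APIs.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class CachedBlock:
    content: Any        # content the block was last rendered with
    fetched_at: float   # when that content was fetched (epoch seconds)
    ttl: float          # content source TTL, in seconds


def refresh_if_stale(
    block: CachedBlock,
    fetch_fresh: Callable[[], Any],
    rerender: Callable[[Any], None],
    now: Optional[float] = None,
) -> bool:
    """Refresh an expired block; return True only if it was re-rendered."""
    now = time.time() if now is None else now
    if now - block.fetched_at < block.ttl:
        return False                  # still fresh: keep the HTML from the CDN
    fresh = fetch_fresh()             # expired: ask the back end for new content
    block.fetched_at = now
    if fresh == block.content:
        return False                  # nothing changed: no re-render needed
    block.content = fresh
    rerender(fresh)
    return True


rendered: list = []
block = CachedBlock(content=["story-1"], fetched_at=0.0, ttl=120.0)
print(refresh_if_stale(block, lambda: ["story-2"], rendered.append, now=60.0))   # False
print(refresh_if_stale(block, lambda: ["story-2"], rendered.append, now=300.0))  # True
print(rendered)  # [['story-2']]
```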

This functionality lets developers control how often each content source updates. You can set some content sources to update frequently and others less often. Balancing the update frequency helps optimize page rendering and load speed. Setting too many sources to update frequently can increase API calls, which may impact page performance. Conversely, setting everything to update slowly delays content updates, so new content appears later than you want.
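To see how TTL choices add up, here is a rough upper bound on cache refreshes per hour for each cache key: at most one refresh every TTL seconds, assuming traffic is steady enough that every key is requested again soon after it expires. The source names and TTL values are hypothetical.

```python
SECONDS_PER_HOUR = 3600


def refreshes_per_hour(ttls: dict[str, int]) -> dict[str, float]:
    """Upper bound on refreshes per hour for each content source cache key."""
    return {name: SECONDS_PER_HOUR / ttl for name, ttl in ttls.items()}


# Hypothetical TTLs in seconds: fast only where freshness matters.
balanced = {"breaking-news": 60, "recent-stories": 300, "author-bio": 3600}
# Everything on the fastest TTL.
aggressive = {"breaking-news": 60, "recent-stories": 60, "author-bio": 60}

print(sum(refreshes_per_hour(balanced).values()))    # 73.0
print(sum(refreshes_per_hour(aggressive).values()))  # 180.0
```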

We recommend that developers balance fast and slow content updates for optimal performance.

In the previous diagram, client-side content refresh API calls follow the same chain: CDN → Render Stack → Content API.

Be aware that these client-side refresh API calls are also cached at the CDN layer.

Why we need two layers of cache

The primary reason for having two layers of cache is resiliency. Each cache layer at Arc XP protects your render stack and downstream APIs.

Let’s explore what the two cache layers mean:

- Arc XP CDN layer

  - Protection from DDoS attacks - the CDN layer shields your render stack from malicious actors who may attempt to overwhelm the system and disrupt access for legitimate users. See Protecting your content sources from bad actors, crawlers, and bots.

  - Global content distribution - the CDN distributes content globally, storing cached copies geographically closer to your readers for faster delivery.

  - Fallback mechanisms - if a render fails, the CDN has sophisticated fallback mechanisms to keep serving traffic to end users.

- PageBuilder cache

  - Protection for downstream APIs - PageBuilder cache safeguards your downstream APIs by caching content sources and controlling which content should be prioritized for freshness. This reduces unnecessary calls to APIs, minimizing the risk of overloading them.

  - Improved performance - caching content with longer TTLs doesn’t necessarily result in stale content, and it can lower TTFB by reducing latency, which can improve web vitals and SEO scores.
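As one illustration of a CDN fallback, here is a generic serve-stale-on-error page cache, the idea behind the `stale-if-error` directive in RFC 5861. This is an assumed pattern for illustration only; this document does not describe how the Arc XP CDN’s fallback mechanisms actually work, and `StaleOnErrorCache` and `render` are hypothetical names.

```python
from typing import Callable, Optional


class StaleOnErrorCache:
    """Generic serve-stale-on-error page cache (illustrative, not Arc XP's CDN)."""

    def __init__(self, render: Callable[[str], str], ttl: float) -> None:
        self._render = render
        self._ttl = ttl
        self._store: dict[str, tuple[str, float]] = {}  # url -> (html, cached_at)

    def get(self, url: str, now: float) -> str:
        cached: Optional[tuple[str, float]] = self._store.get(url)
        if cached is not None and now - cached[1] < self._ttl:
            return cached[0]              # fresh hit: no render
        try:
            html = self._render(url)      # miss or expired: render again
        except Exception:
            if cached is not None:
                return cached[0]          # render failed: fall back to the stale copy
            raise                         # nothing cached to fall back on
        self._store[url] = (html, now)
        return html


render_stack_up = True


def render(url: str) -> str:
    if not render_stack_up:
        raise RuntimeError("render failed")
    return f"<html>{url}</html>"


edge = StaleOnErrorCache(render, ttl=60.0)
print(edge.get("/sports", now=0.0))    # <html>/sports</html>
render_stack_up = False
print(edge.get("/sports", now=120.0))  # <html>/sports</html> (stale copy)
```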

Together, these two cache layers enable Arc XP to provide a secure, resilient, and fast content delivery platform at scale.