In this blog post, we will explore how Arc XP scales to billions of requests safely and efficiently. The best way to explore this common scaling topic is through an example and some napkin math with easy-to-follow numbers. Let’s say we have 1,000 sports articles on our site, and 100,000 readers are reading them.

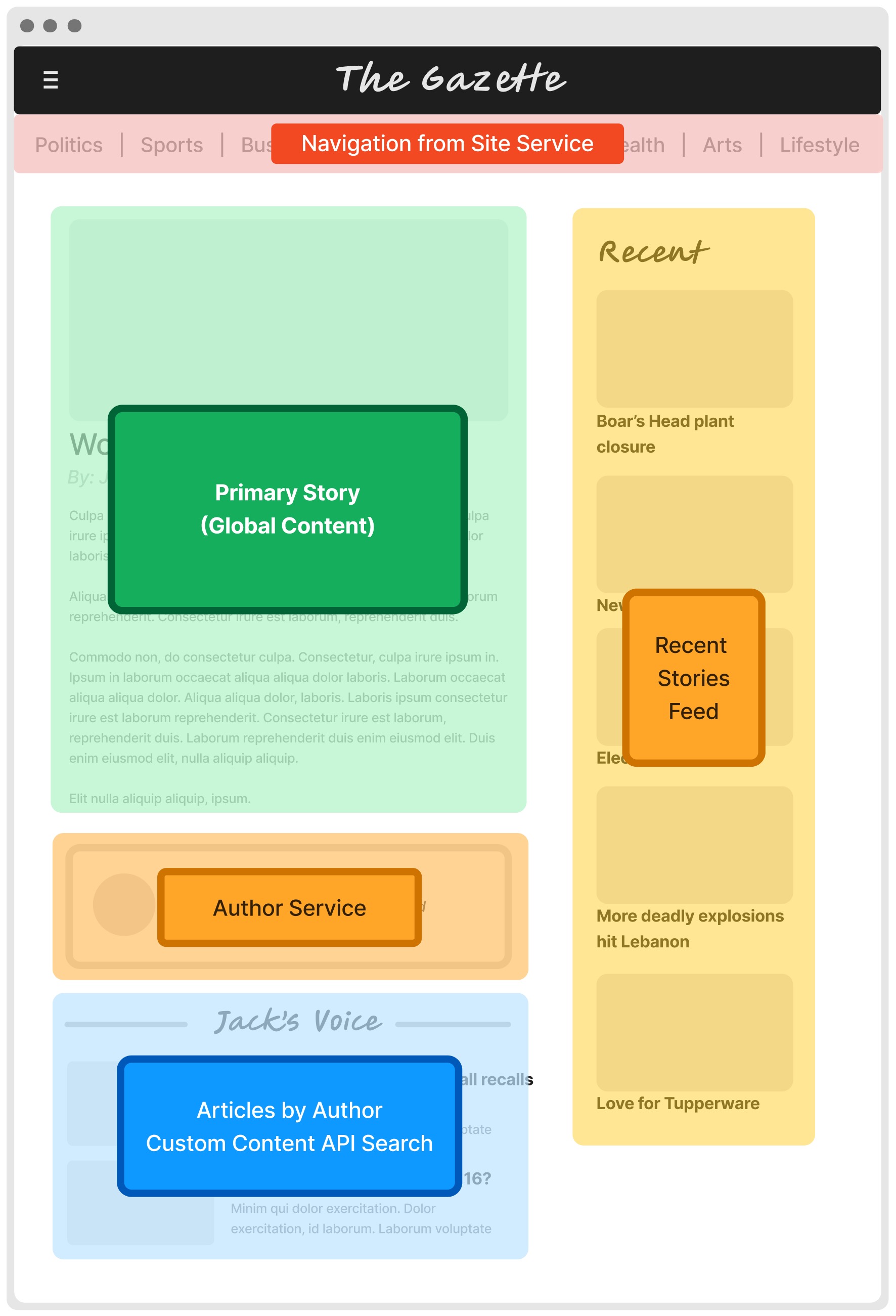

First, it’s important to understand what a “visit” means to the underlying systems. Let’s start by visualizing a typical article page layout and deconstructing it:

This page layout includes separate content pieces that need to be fetched individually to render the page. The content for each block may come from a different API, perhaps even an external one like Google Analytics (e.g., to populate the “Most popular articles” box). In this example, composing the page takes a total of 6 API calls. (See the Understanding Page Composition guide for more.)

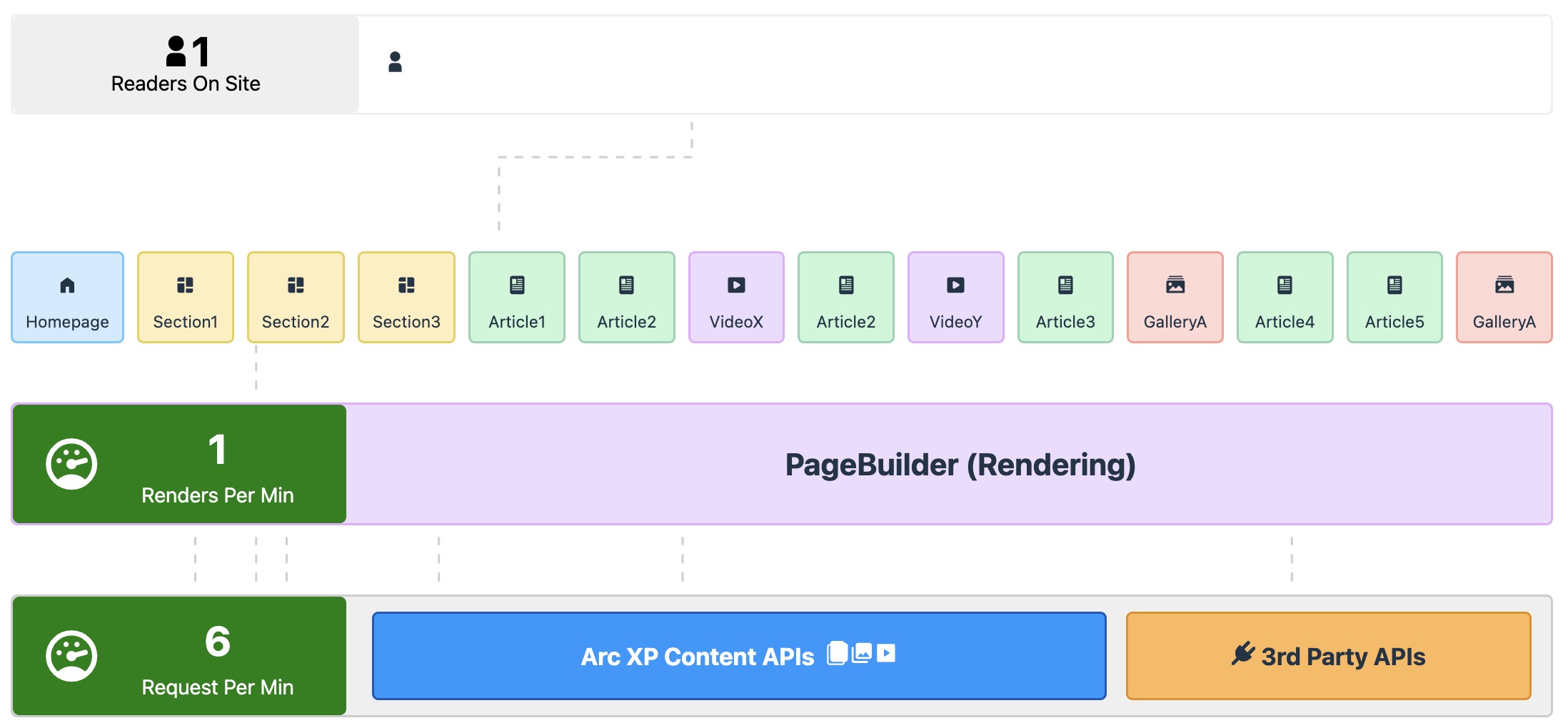

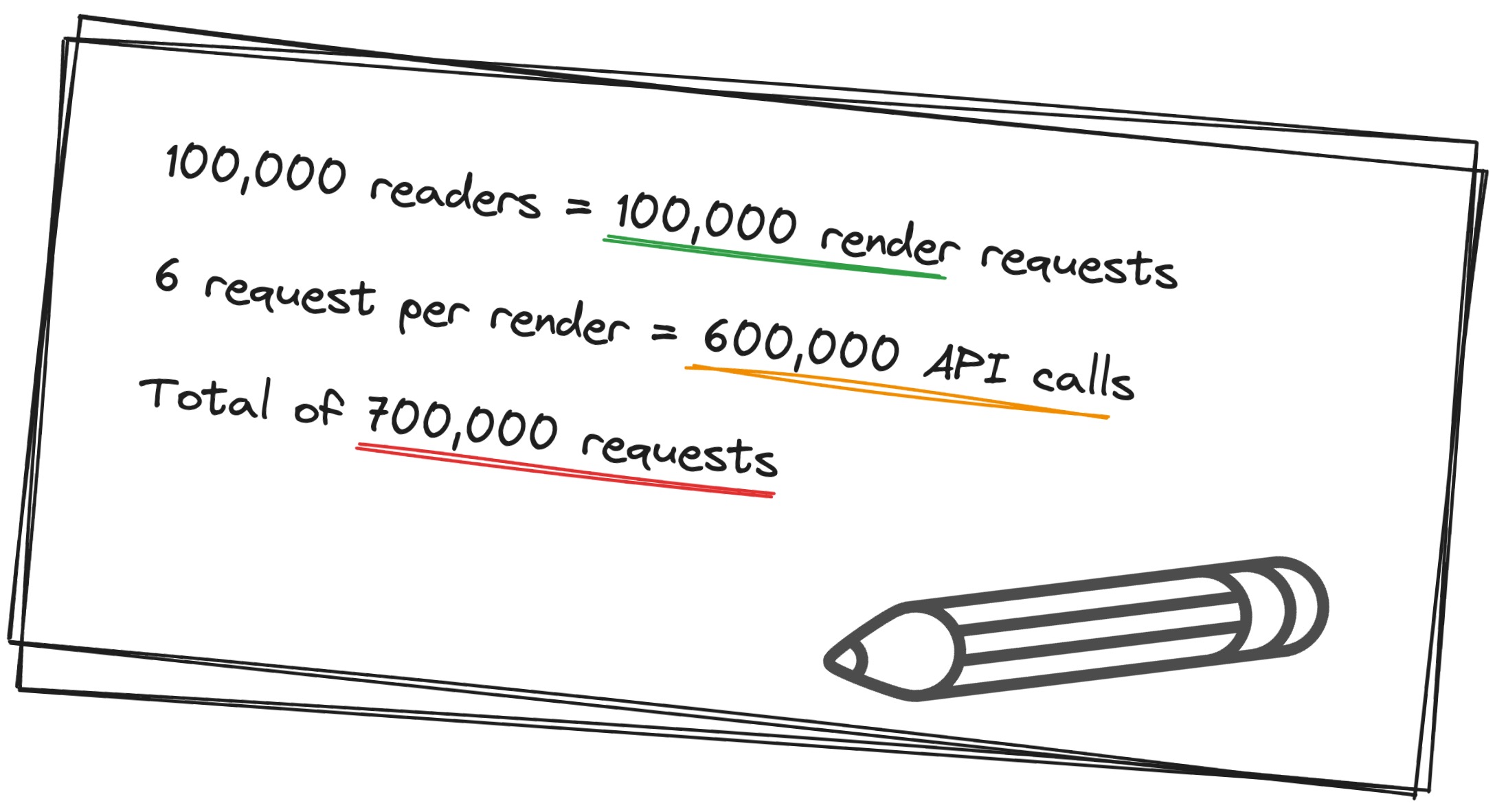

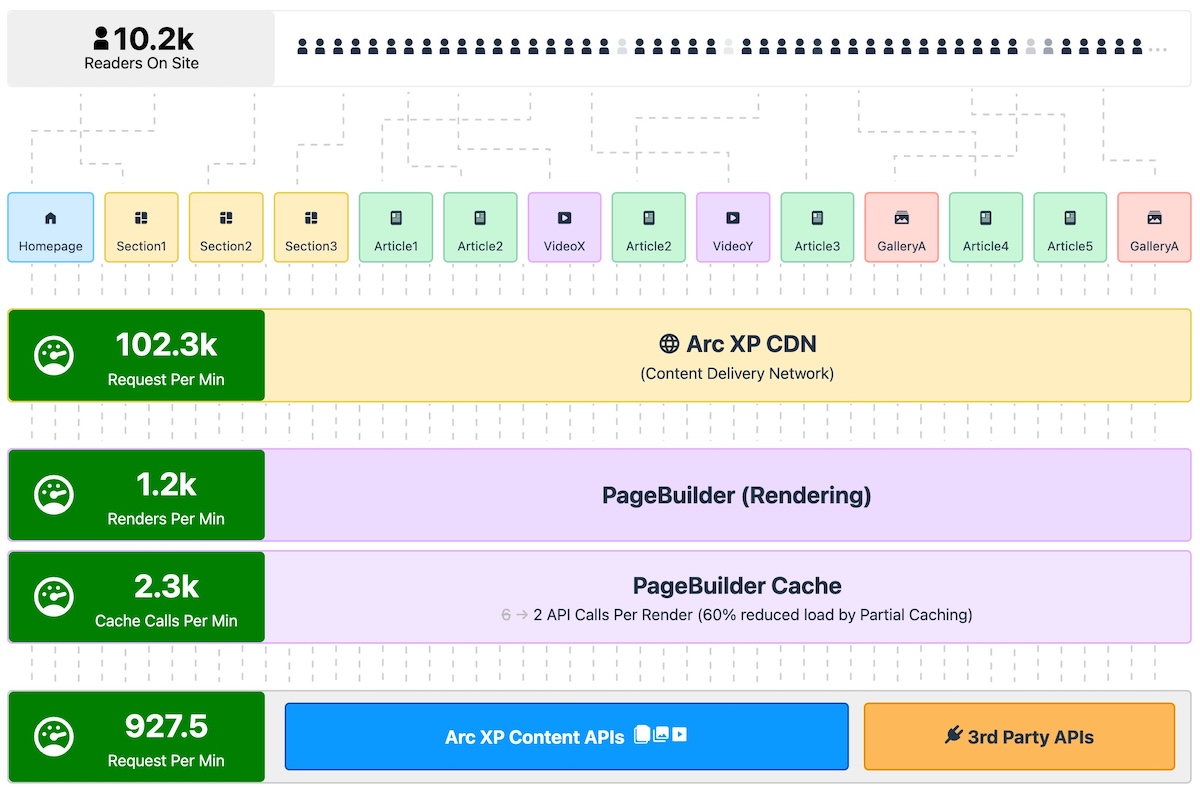

Let’s start by assuming that none of these pages are cached anywhere, so every reader request requires a page render. For 100,000 readers accessing 1,000 different articles (with perfect distribution, meaning 100 readers per article), each page render needs the following API calls:

- Site Service API for navigation

- Content API to fetch the primary article content

- Content API to fetch the Recent Stories feed (likely just sports articles)

- Google Analytics API to query top-viewed articles for the Most Read block

- Author Service API to fetch the author bio

- Content API to fetch stories by the current author

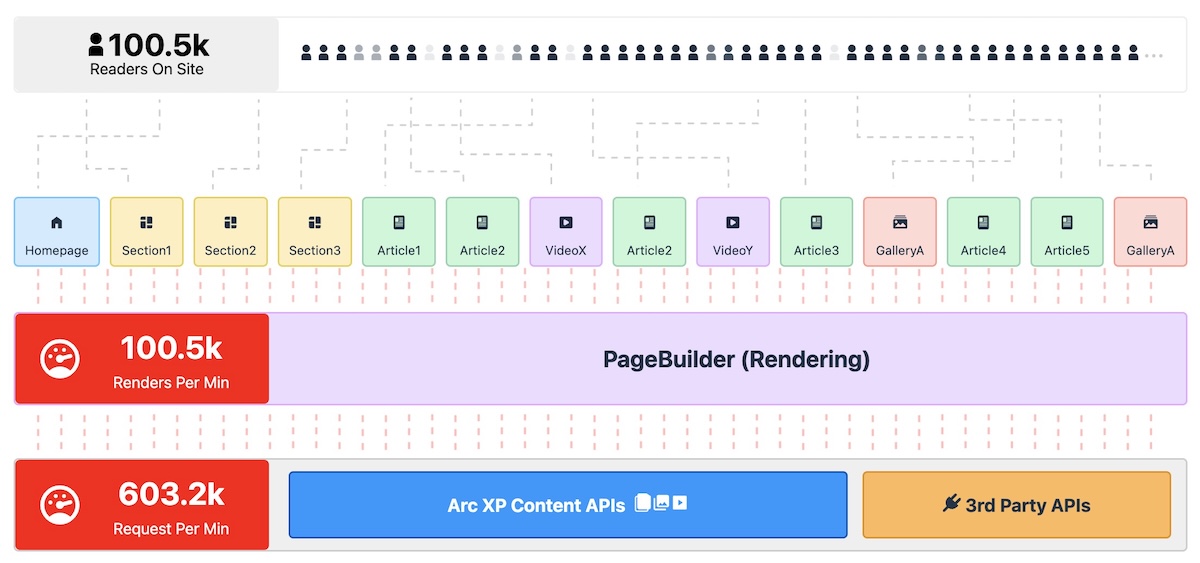

This results in six API calls per page render. A single reader’s request would look like this in the Arc XP rendering stack (with no cache):

For 100,000 reader requests, that’s a total of 600,000 API calls.
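The no-cache arithmetic above fits in a tiny Python function. This is a minimal sketch, assuming every reader request triggers a full render and every render calls all six content sources listed earlier. The names `CALLS_PER_RENDER` and `api_calls_without_cache` are hypothetical, chosen for illustration.

```python
# Hypothetical napkin-math helper: downstream API calls with no caching at all.
CALLS_PER_RENDER = 6  # navigation, article, recent stories, most read, author bio, author stories


def api_calls_without_cache(reader_requests: int, calls_per_render: int = CALLS_PER_RENDER) -> int:
    """Every request is rendered from scratch, so each one pays for every API call."""
    page_renders = reader_requests  # no CDN: one render per request
    return page_renders * calls_per_render


print(api_calls_without_cache(100_000))  # 600000
```

Because the total grows linearly with reader requests, doubling the audience doubles the load on every downstream API.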

Issues with this approach

The following issues exist with this approach:

- Slow rendering: even if each API call takes just 100-200 milliseconds, six calls made one after another add up to roughly 0.6-1.2 seconds of render time, not including network latency, which can lead to noticeable delays for readers.

- API rate limits: many APIs have rate limits to prevent overload. For example, the Google Analytics API limits requests to 100 per second or 50,000 per day. And while Arc XP’s CDN and render stack can scale rapidly, the underlying APIs cannot handle these high volumes.

Without a layer to protect the downstream systems, these limits cause renders to fail, and pages can’t be served to your readers.
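Both issues can be put into numbers. The sketch below is illustrative only. It assumes the six calls run sequentially at 100-200 ms each, ignores network latency, and assumes one Google Analytics call per render (the Most Read block) against the 100-per-second and 50,000-per-day limits quoted above. All function names are hypothetical.

```python
# Assumptions (from the text): 6 API calls per render at 100-200 ms each,
# made sequentially, network latency ignored; Google Analytics allows
# 100 requests/second or 50,000 requests/day; one GA call per render.

def sequential_api_time_ms(calls: int, per_call_ms: int) -> int:
    """Time spent waiting on APIs when each call starts after the previous one finishes."""
    return calls * per_call_ms


def ga_calls_over_daily_quota(renders: int, daily_quota: int = 50_000) -> int:
    """Most Read lookups that exceed the daily budget (one GA call per render)."""
    return max(0, renders - daily_quota)


def min_seconds_at_rate_limit(calls: int, per_second_limit: int = 100) -> float:
    """Shortest time in which `calls` requests fit under a per-second limit."""
    return calls / per_second_limit


print(sequential_api_time_ms(6, 100), sequential_api_time_ms(6, 200))  # 600 1200
print(ga_calls_over_daily_quota(100_000))  # 50000
print(min_seconds_at_rate_limit(100_000))  # 1000.0
```

Under these assumptions, each render waits 0.6-1.2 seconds on APIs, half of the 100,000 Most Read lookups would exceed the daily budget, and even a perfectly paced client would need 1,000 seconds (over 16 minutes) to stay under the per-second limit.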

How Arc XP handles this scenario

Arc XP has a robust, multi-layered caching and resiliency system designed to handle these scenarios effectively.

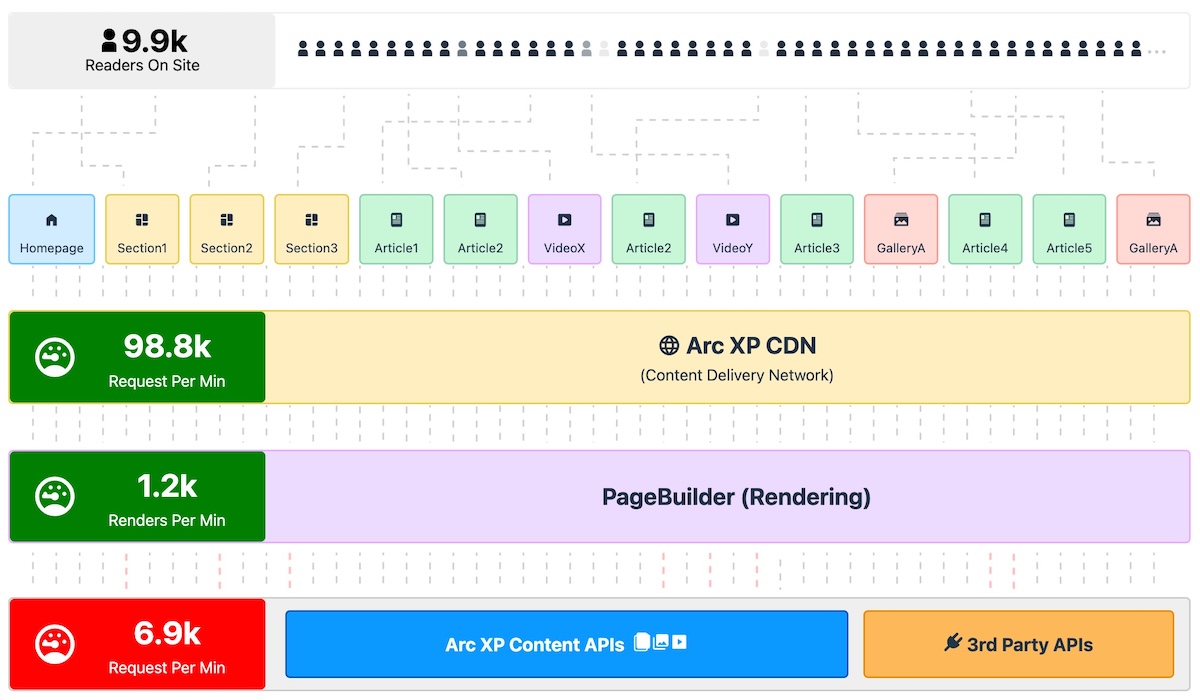

The first layer is the Arc XP CDN, which caches rendered pages and serves them to readers. It also distributes the cache globally across more than 4,000 edge servers in over 130 countries.

The second layer is the PageBuilder cache, which stores the content sources and blocks used during page rendering—giving developers fine-grained control over caching behavior. See Arc XP CDN and PageBuilder Caching to learn more about these resiliency layers.

Back to our example. With the Arc XP CDN layer in place, the 100,000 requests are reduced to 1,000 page renders (one per article), but the downstream APIs still receive a high volume of requests: 6,000 in total. That is still a significant load on the content APIs, whether they are Arc XP Content APIs or external ones.
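Here is the CDN-only math as a minimal sketch. It assumes that within one CDN cache period every article is requested at least once, so each article page is rendered exactly once and every other request is served from the edge. The function names are hypothetical.

```python
# Assumption: within one CDN cache period every article is requested at
# least once, so each article page is rendered once and every other
# request is served from the CDN edge.

def renders_with_cdn(reader_requests: int, articles: int) -> int:
    """One render per distinct page; never more renders than requests."""
    return min(reader_requests, articles)


def api_calls_with_cdn(reader_requests: int, articles: int, calls_per_render: int = 6) -> int:
    """Every render still makes all of its API calls; only the render count shrinks."""
    return renders_with_cdn(reader_requests, articles) * calls_per_render


def cdn_hit_ratio(reader_requests: int, articles: int) -> float:
    """Share of reader requests answered by the CDN without a render."""
    return 1 - renders_with_cdn(reader_requests, articles) / reader_requests


print(renders_with_cdn(100_000, 1_000))          # 1000
print(api_calls_with_cdn(100_000, 1_000))        # 6000
print(round(cdn_hit_ratio(100_000, 1_000), 4))   # 0.99
```

A 99% hit ratio protects the render stack, but each of the remaining renders still fans out into six API calls.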

This is where the PageBuilder cache comes in. PageBuilder caches each content source by its name and query parameters (together, these form a “cache key” internally). If the same request is made again, the cached content is used, avoiding repeated API calls.
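To make the cache-key idea concrete, here is a toy TTL cache keyed by content source name plus query. It is a sketch of the general technique, not PageBuilder’s internal implementation. `cache_key`, `ContentSourceCache`, and the source names such as `"recent-stories"` are all hypothetical.

```python
import json
import time
from typing import Any, Callable, Dict, Tuple


def cache_key(source_name: str, query: Dict[str, Any]) -> str:
    """Combine the content source name and its query; sorted keys make param order irrelevant."""
    return f"{source_name}:{json.dumps(query, sort_keys=True)}"


class ContentSourceCache:
    """Toy TTL cache keyed by (content source name, query). Not PageBuilder's real implementation."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self.entries: Dict[str, Tuple[float, Any]] = {}  # key -> (fetched_at, value)
        self.origin_calls = 0  # how many times we actually hit the downstream API

    def fetch(self, source_name: str, query: Dict[str, Any],
              fetcher: Callable[[Dict[str, Any]], Any]) -> Any:
        key = cache_key(source_name, query)
        now = self.clock()
        entry = self.entries.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            return entry[1]  # cache hit: no downstream call
        self.origin_calls += 1  # cache miss or expired entry
        value = fetcher(query)
        self.entries[key] = (now, value)
        return value


# 1,000 renders asking for the same Recent Stories feed -> one downstream call.
cache = ContentSourceCache(ttl_seconds=300)
for _ in range(1_000):
    cache.fetch("recent-stories", {"section": "sports", "size": 10}, lambda q: ["story-1", "story-2"])
print(cache.origin_calls)  # 1
```

Sorting the query keys matters: two blocks that pass the same parameters in a different order should share one cache entry instead of triggering two API calls.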

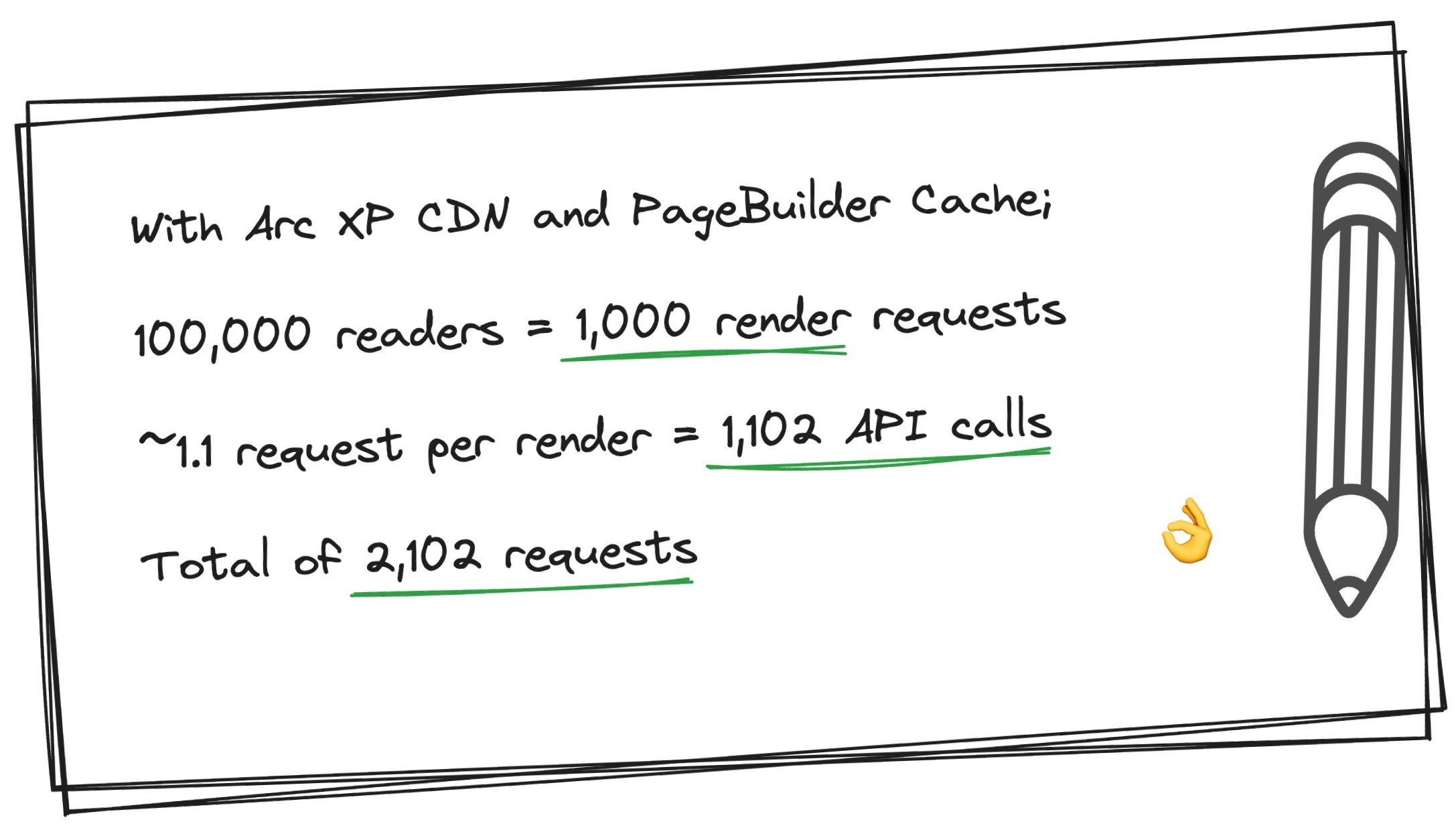

Let’s revisit our napkin math:

- The Most Read stories block is reduced from 1,000 requests to 1 Google Analytics API call, which can be cached even longer by customizing its TTL.

- The Recent Stories block is reduced from 1,000 requests to 1 Content API call for the stories.

- For the Author Bio and Author Stories blocks, let’s assume there are 50 authors. Regardless of how stories are distributed among them (as long as each author has at least one story), the totals are:

  - 1,000 requests reduced to 50 calls (one per author) for the bios.

  - 1,000 requests reduced to 50 calls (one per author) for their stories.

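With caching in place, only 1,103 API calls (1,000 article bodies, 1 navigation, 1 Most Read, 1 Recent Stories, 50 author bios, and 50 author story lists) are needed to serve 100,000 reader requests.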
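The cached total can be checked by counting distinct cache keys, since each distinct key costs one downstream call per TTL window. This sketch counts all six content sources, including the site navigation call, so the total it reports is 1,103. It assumes all renders fall inside one TTL window and spreads articles evenly over authors; the key tuples and the function name are hypothetical.

```python
from collections import Counter


def origin_calls_by_source(articles: int, authors: int) -> Counter:
    """Count distinct cache keys; each one costs exactly one downstream call per TTL window."""
    unique_keys = set()
    for article_id in range(articles):
        author_id = article_id % authors  # hypothetical even spread of articles over authors
        unique_keys.update({
            ("site-navigation",),           # same for every page
            ("article", article_id),        # unique per article
            ("recent-stories", "sports"),   # same sports feed on every page
            ("most-read",),                 # one Google Analytics query
            ("author-bio", author_id),      # one per author
            ("author-stories", author_id),  # one per author
        })
    return Counter(key[0] for key in unique_keys)


calls = origin_calls_by_source(articles=1_000, authors=50)
print(sum(calls.values()))  # 1103
```

Any other mapping of articles to authors gives the same count, as long as every one of the 50 authors appears at least once.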

You can take this optimization even further by customizing content source TTLs and using partial caching. (See the Partial Caching guide to learn more.)
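As a rough guide to choosing a TTL, one cache key costs about one downstream call per TTL period. This minimal sketch assumes steady traffic, so an expired entry is refetched immediately. `origin_calls_per_key` is a hypothetical helper, not an Arc XP setting.

```python
import math


def origin_calls_per_key(window_seconds: float, ttl_seconds: float) -> int:
    """Refetches for one cache key, assuming steady traffic refetches it as soon as it expires."""
    return math.ceil(window_seconds / ttl_seconds)


ONE_DAY = 24 * 60 * 60
for ttl in (60, 300, 3_600):
    print(ttl, origin_calls_per_key(ONE_DAY, ttl))
# 60 1440
# 300 288
# 3600 24
```

For the Most Read block, even a 60-second TTL means about 1,440 Google Analytics calls per day, well under the 50,000-per-day limit mentioned earlier, and a longer TTL shrinks that further.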

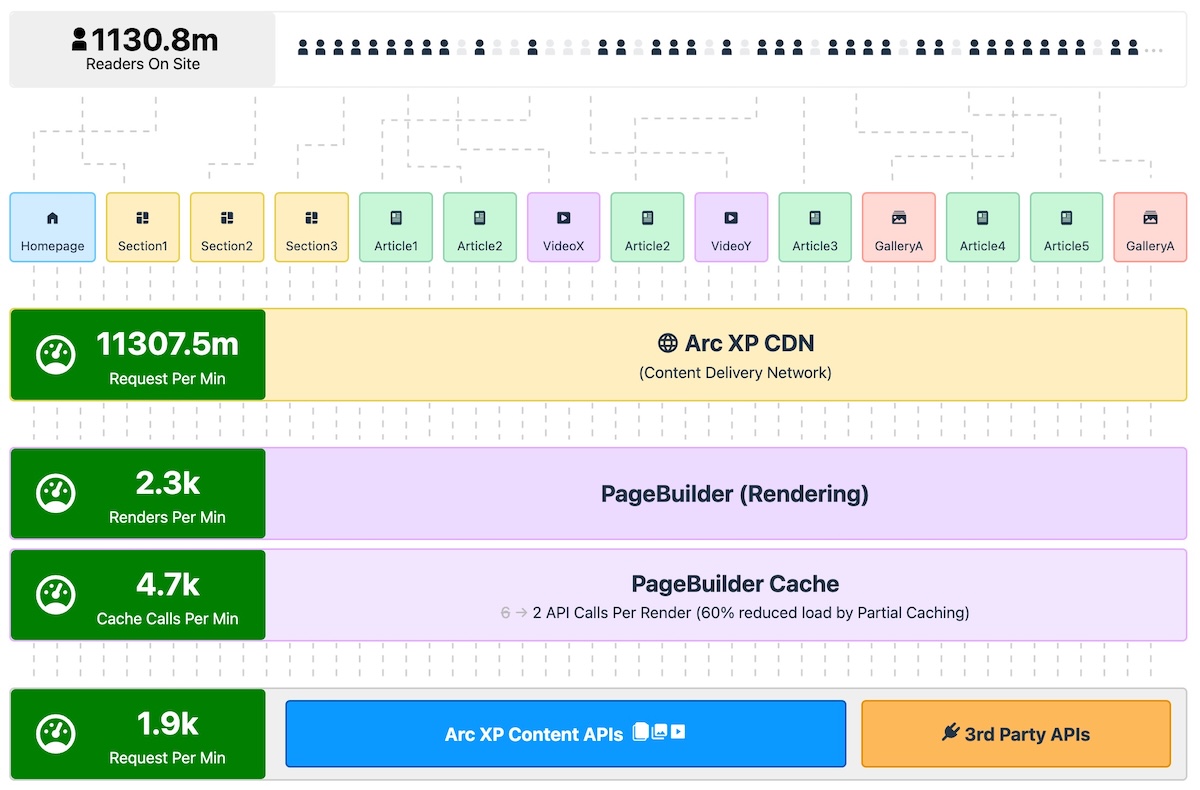

The beauty of this cache model is that, within the same cache time frame, if 1 million, 10 million, or even 1 billion readers read the same 1,000 sports articles, our render load and downstream API load would be exactly the same.
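A toy model rolls the earlier napkin math into one function to show that independence. It assumes every article is read at least once and all traffic falls inside a single CDN and PageBuilder TTL window. `simulate` is a hypothetical name, and its cached total counts the navigation call, giving 1,103.

```python
def simulate(readers: int, articles: int = 1_000, authors: int = 50, calls_per_render: int = 6) -> dict:
    """Napkin-math model of the example; assumes every article is read at least once per TTL window."""
    if readers < articles:
        raise ValueError("this sketch assumes every article gets at least one reader")
    return {
        "api_calls_no_cache": readers * calls_per_render,
        "page_renders_with_cdn": articles,  # one render per article
        # article bodies + (navigation, Most Read, Recent Stories) + (bio, stories) per author
        "api_calls_with_both_caches": articles + 3 + 2 * authors,
    }


for readers in (100_000, 1_000_000_000):
    print(readers, simulate(readers))
```

Only the no-cache figure depends on `readers`; the render count and the cached API count depend solely on how many distinct pages and content-source queries exist.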

Here is the exact same simulation for a 1 billion reader scenario (that’s 10,000x our example):

We would love to hear from you about your scale challenges and how we can help you to scale your content delivery. Join the conversation in Arc XP Community Discussions.