While nobody but search engine implementors knows precisely how video figures into search rankings, it is widely considered important for both SEO and reader engagement. Video can also be great for accessibility, since the story text is converted to speech as part of the generation process. For decades, news organizations have recognized that having a familiar face presenting the news is a key part of their brand. However, many news organizations don’t have an anchor. This is where IFX and the video generation service Hedra can help. This blog/tutorial/guide thingy will walk you through how to get started.

What is IFX?

IFX is an extensibility feature of Arc XP. We enable customers to write and ship their own custom code to run on our platform. This allows you to safely and securely extend the Arc XP platform to meet your publishing workflow needs.

What is Hedra?

Hedra is a video generation service that uses AI to generate video content. Its API accepts a prompt and some text, and generates a video of a character (in our case, a news anchor) reading that text. This is a great way to add video content to your site.

Requirements

- A Hedra account

- An IFX integration with Arc XP (Pssst! Your organization’s first integration is $free.99!)

- Working knowledge of IFX and story events on the Arc XP platform

Getting Started

Let’s summarize what we want to achieve:

- We want to generate a video of a news anchor reading the entire story

- We want to use the same news anchor for all stories

- We want the news anchor to have the same voice for all stories

- To keep costs in check, we only want to generate video for news stories we specify in Composer rather than all stories.

- We want to generate a video only once per story when the story is first published in Composer.

With all that in mind, let’s get our hands dirty.

Establish your Hedra Anchor Face and Voice

1. Decide on your prompt. Hedra will take whatever prompt you give it and guesstimate what your anchor should look like, so the prompt is very important. If you are unfamiliar with AI prompting, Anthropic’s documentation has a good primer on prompt engineering.

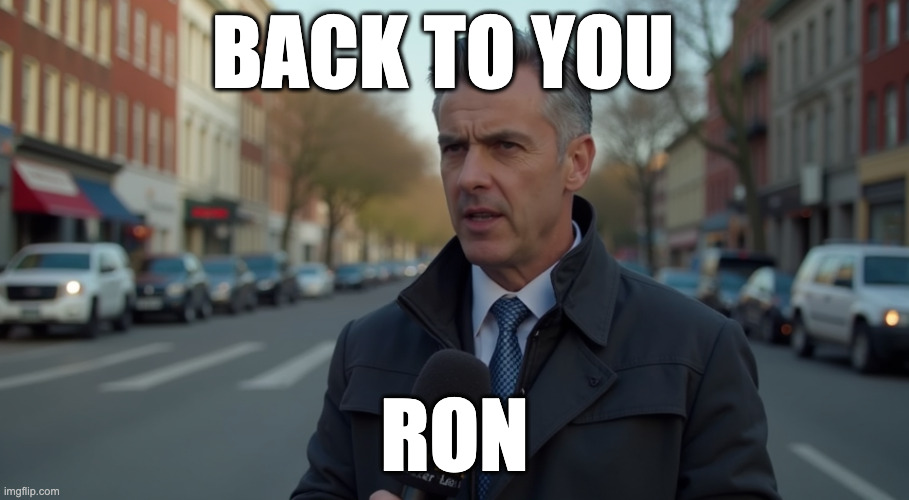

2. Using Hedra’s website (or API), generate a few videos or images (using the Generate tab) with different prompts to find a face and voice that best fit your organization. If you already have an image or voice you want to use, you can specify those before creation. Hedra generates images much faster and more cheaply than video, and you can use a generated image as your anchor. Here is my anchor; his name is “Ronald T. Burgundy Esq.”

3. I generated quite a few images and videos with various prompts before I found one I liked, and I suggest you do the same. Some of them will make you laugh! However, keep an eye on your budget: it’s very easy to blow through your credits when you’re on a roll. Once you have the image you want, delete the other images in your library. We will pull the image URL from an API call later, and finding the one you want in the API response is difficult if your library holds a ton of images.

4. Generate your first test video! The UI helps you with the process by letting you select a starting image from your library. You can then specify the text of the video, the voice you want it to use (or generate a new voice!), and the prompt telling the AI how the video should look. You can also choose which AI model will generate the video. I had the best results with the Flux Realism model, but this is another area where you may want to experiment to find what works best for you.

5. Video generation can take a while, depending on site load, how much text there is, and whether you are generating a new voice. Time to go grab some coffee.

6. Once the video is generated, watch it and decide if you like the voice being used and how the model looks. If not, GOTO step 2. If you like it, GOTO step 7.

7. We are done in the Hedra UI; it’s time to make some API calls.

Get the Image URL and Voice ID from Hedra’s API

We want to reuse the same image and voice for all stories; this helps establish a consistent look and feel for our stories.

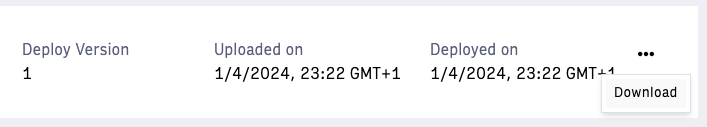

1. You will need to make a few API calls to Hedra to get the image URL and voice ID. Fire up your favorite API tool; I used Insomnia (Arc XP has no affiliation, etc., etc.). Hedra offers a collection you can import into your API tool (click the download button).

2. Make an API call to /v1/projects to get a list of all your generated videos. From the response, locate the avatarImageURL of the anchor image you want to use, as well as the voiceID of the desired voice. Save both values in your .env file for use in your IFX integration.

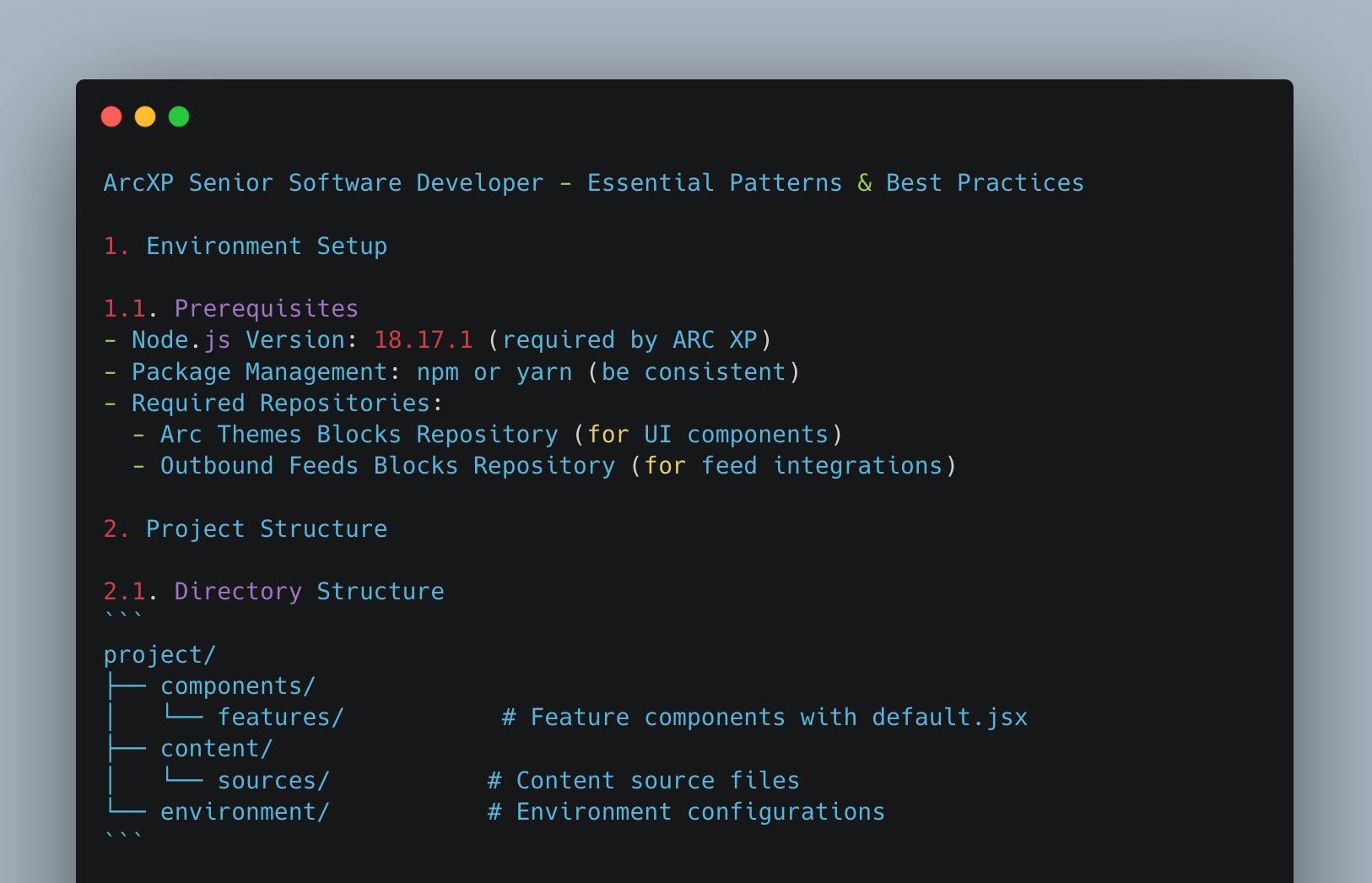
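If your library has grown large, spotting the right pair in the response by eye gets tedious. The sketch below is not part of any Hedra SDK. It lists the distinct image/voice pairs from an already-parsed /v1/projects response so you can pick one and paste it into .env.

It rests on a few assumptions:
- The response is either an array of projects or an object with a `projects` array.
- Each project carries the avatarImageURL and voiceID fields mentioned above.
- The function names are hypothetical.

Check the field names against your actual response before relying on this.

```javascript
// Hypothetical helper, not a Hedra SDK function. Assumes the parsed
// /v1/projects response is an array of projects or { projects: [...] },
// and that each project has avatarImageURL and voiceID fields.
function collectAnchorCandidates(responseBody) {
  const projects = Array.isArray(responseBody)
    ? responseBody
    : (responseBody && responseBody.projects) || [];

  // Keep the first occurrence of each distinct image/voice pair.
  const seen = new Map();
  for (const project of projects) {
    const { avatarImageURL, voiceID } = project;
    if (!avatarImageURL || !voiceID) continue; // skip incomplete entries
    const key = `${avatarImageURL}|${voiceID}`;
    if (!seen.has(key)) seen.set(key, { avatarImageURL, voiceID });
  }
  return [...seen.values()];
}

// Formats a chosen pair as lines for the .env file used later in this guide.
function toEnvLines({ avatarImageURL, voiceID }) {
  return [`AVATAR_IMAGE_URL=${avatarImageURL}`, `VOICE_ID=${voiceID}`].join('\n');
}
```

Printing the candidates and copying one `toEnvLines` result is usually enough once your library only holds a handful of images.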

This is what your .env file should look like:

    # This is your Hedra API key
    X_API_KEY=YOUR KEY
    PROMPT=YOUR PROMPT
    # KEEP THIS THE SAME FOR ALL CALLS TO HEDRA IF YOU WANT CONSISTENCY
    SEED=SEED FROM HEDRA API CALL RESPONSE
    # KEEP THIS THE SAME FOR ALL CALLS TO HEDRA IF YOU WANT CONSISTENCY
    AVATAR_IMAGE_URL=URL FROM HEDRA API CALL
    # KEEP THIS THE SAME FOR ALL CALLS TO HEDRA IF YOU WANT CONSISTENCY
    VOICE_ID=VOICE ID FROM HEDRA API CALL
    BASE_URL=https://api.sandbox.<YOUR ORG>.arcpublishing.com
    # Arc XP personal access token, used by the handler to call the Draft API
    PERSONAL_ACCESS_TOKEN=YOUR ARC XP ACCESS TOKEN
    # USED BY ARC VIDEO CENTER SHOULD YOU CHOOSE TO WRITE THE VIDEO THERE
    # USING THE VIDEO CENTER API
    VIDEO_CENTER_VERSION=0.8.0
    VIDEO_STREAM_TYPE=.mp4
    VIDEO_CATEGORY=YOUR STORY CATEGORY

Write the IFX Integration Code
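Because the integration depends on the .env variables listed above, it can help to fail fast when one is missing. That beats sending Hedra an incomplete request. Here is a small sketch of such a startup check.

The helper name `loadHedraConfig` and the list of required variables are my own choices, not part of IFX. The handler later in this guide also reads PERSONAL_ACCESS_TOKEN for its Draft API call, so the sketch checks for it too.

```javascript
// Hypothetical startup check (not an IFX API). Lists the variables the Hedra
// handler reads; PROMPT, SEED and the Video Center settings are left out
// because the handler in this guide does not use them.
const REQUIRED_VARS = [
  'X_API_KEY',
  'AVATAR_IMAGE_URL',
  'VOICE_ID',
  'BASE_URL',
  'PERSONAL_ACCESS_TOKEN',
];

function loadHedraConfig(env = process.env) {
  // Treat unset and blank values the same way.
  const missing = REQUIRED_VARS.filter((name) => !env[name] || !env[name].trim());
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return {
    apiKey: env.X_API_KEY,
    avatarImage: env.AVATAR_IMAGE_URL,
    voiceId: env.VOICE_ID,
    draftUrlBase: `${env.BASE_URL}/draft/v1/story/`,
    personalAccessToken: env.PERSONAL_ACCESS_TOKEN,
  };
}
```

In the handler you could call `loadHedraConfig()` once at startup and use the returned values instead of reading `process.env` in several places.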

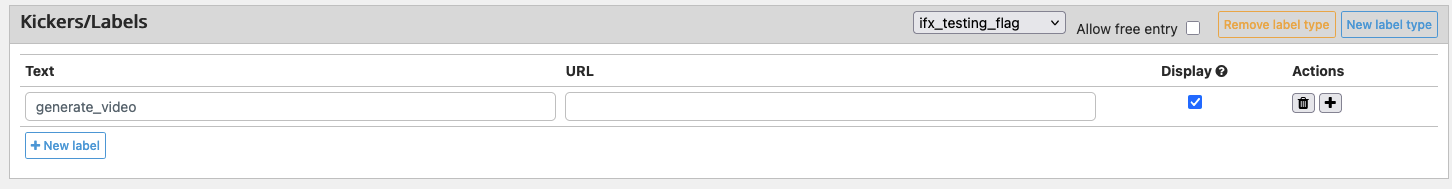

1. First, we need to create a kicker that tells our integration to generate video for a story. In Composer Settings, create a label (A.K.A. kicker) for the stories you want to generate a video for. For this example, I will use the label “generate_video”. The handler below reads it from the story ANS at label.ifx_testing_flag.text, so adjust that path if your label is configured differently.

2. Next, we need to update our integration code. Download a copy of your IFX integration code from either the IFX API or the IFX Bundles page in Arc Admin.

3. Open your integration code bundle in your favorite code editor.

4. Here is where the magic happens. We want to tell our IFX integration to run when:

a. We have specified in Composer that we want this story to generate video.

b. The integration sees that this is the first time the story has been published.

5. Create a new file in src/eventsHandlers called “hedraHandlerFirstPublish.js”. In that file, add the following code:

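The code reads its API key and IDs from environment variables. For a deployed integration, store these values as IFX secrets; the IFX documentation explains how to use secrets within an integration.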

    const Hedra = require('hedra-node');
    const { parseDocument, DomUtils } = require('htmlparser2');
    const axios = require('axios');

    const DRAFT_URL_BASE = `${process.env["BASE_URL"]}/draft/v1/story/`;
    const config = {
      headers: { Authorization: `Bearer ${process.env["PERSONAL_ACCESS_TOKEN"]}` }
    };

    // Fetch the full story ANS from the Arc XP Draft API.
    async function callArcDraftAPI(storyId) {
      console.log("Calling Draft API");
      // Strongly recommend defensive coding here; keeping it simple for the blog post.
      try {
        const response = await axios.get(`${DRAFT_URL_BASE}${storyId}/revision/draft`, config);
        console.log("Draft API response: " + JSON.stringify(response.data));
        return response.data;
      } catch (err) {
        console.error("Error reading from Draft API: " + err);
        return null;
      }
    }

    // Here we grab all the story text from the content elements and concatenate
    // them into a single story for Hedra to read.
    function concatenateTextContent(contentElements) {
      // Defensive coding goes here!
      return contentElements
        .filter(element => element.type === 'text')
        // Text elements may contain inline HTML, so parse it and keep only the text.
        .map(element => DomUtils.textContent(parseDocument(element.content)))
        .join(' ');
    }

    async function hedraHandlerFirstPublish(eventNotification) {
      const body = eventNotification.body;
      // ANS stores the story ID in _id (as in the sample test event); fall back to id.
      const storyId = body?._id || body?.id;
      if (!storyId) {
        console.error("No story ID found in event notification");
        return;
      }

      const videoFlag = body.label?.ifx_testing_flag?.text;
      console.log(`Running Hedra Handler for story: ${storyId}, generate_video flag is set to ${videoFlag}`);

      // We probably don't want to generate video for ALL published stories.
      // We use the Arc label/kicker to decide whether to generate a video.
      if (videoFlag !== "generate_video") {
        console.log("Story kicker label not set, not generating video");
        return;
      }

      const client = new Hedra({
        // Add this to your IFX account secrets
        apiKey: process.env['X_API_KEY'],
      });

      console.log("Extracting story text.");
      // Call Draft for the full story ANS. You may need to mock this for local
      // testing if you are using fake story data.
      const storyANS = await callArcDraftAPI(storyId);
      if (!storyANS?.ans?.content_elements) {
        console.error("Could not load story content from the Draft API");
        return { "status": "error loading story ANS" };
      }
      const concatenatedContent = concatenateTextContent(storyANS.ans.content_elements);

      const characterCreateParams = {
        aspectRatio: "1:1",
        audioSource: "tts",
        // Add these to your IFX account secrets
        avatarImage: process.env['AVATAR_IMAGE_URL'],
        text: concatenatedContent,
        voiceId: process.env['VOICE_ID']
      };
      console.log("Creating story video with params:", characterCreateParams);

      let characterCreateResponse;
      try {
        characterCreateResponse = await client.characters.create(characterCreateParams);
      } catch (err) {
        console.error("Error creating character:", err);
        return { "status": "error creating Hedra project" };
      }
      console.log('Character Create Response:', characterCreateResponse);

      return { "status": characterCreateResponse };
    }

    module.exports = hedraHandlerFirstPublish;

6. Update src/eventsHandlers.js to import and use the new handler:

    const defaultHandler = require('./eventsHandlers/defaultHandler');
    const hedraHandlerFirstPublish = require('./eventsHandlers/hedraHandlerFirstPublish');

    module.exports = {
      defaultHandler,
      hedraHandlerFirstPublish,
    };

7. Update src/eventsRouter.json to assign the story:first-publish event to your handler:

    {
      "hedraHandlerFirstPublish": ["story:first-publish"]
    }

8. Time to test! You will need to simulate the story:first-publish event to test this. The event body must contain the story ANS fields the handler reads: the story ID and its label. We send the simulated event to the localhost server that starts when we run our integration. The simulated event, with the story ANS in its body, looks like:

    {
      "key": "story:first-publish",
      "organizationId": "thisissparta",
      "typeId": 1,
      "version": 2,
      "time": null,
      "uuid": "",
      "invocationId": "12345-4db6-423b-915f-6b8f0c3a6487",
      "body": {
        "_id": "<ACTUAL IDS DONT MATTER HERE>",
        "additional_properties": { "clipboard": {}, "has_published_copy": false },
        "address": {},
        "canonical_website": "thisissparta",
        "comments": { "allow_comments": true },
        "content_elements": [
          {
            "_id": "<ACTUAL IDS DONT MATTER HERE>",
            "additional_properties": { "_id": 1730898698259, "comments": [], "inline_comments": [] },
            "content": "Story text 1",
            "type": "text"
          },
          {
            "_id": "<ACTUAL IDS DONT MATTER HERE>",
            "additional_properties": { "_id": 1730898698260, "comments": [], "inline_comments": [] },
            "content": "Story Text 2",
            "type": "text"
          }
        ],
        "content_restrictions": { "content_code": "default" },
        "created_date": "2024-11-05T23:23:21.501Z",
        "description": { "basic": "" },
        "headlines": {
          "basic": "This is an AI generated story, it is very silly",
          "meta_title": "",
          "mobile": "",
          "native": "",
          "print": "",
          "tablet": "",
          "web": ""
        },
        "label": {
          "ifx_testing_flag": { "display": true, "text": "generate_video", "url": "" }
        },
        "language": "",
        "last_updated_date": "2024-11-06T18:55:32.506Z",
        "owner": { "id": "thisissparta", "sponsored": false },
        "subheadlines": { "basic": "AI story" },
        "subtype": "Article",
        "_website_ids": ["thisissparta"],
        "_website_urls": [],
        "canonical_url": "",
        "publishing": {
          "scheduled_operations": { "publish_edition": [], "unpublish_edition": [] }
        }
      }
    }

The event request itself looks like the cURL command below:

    curl --location 'http://127.0.0.1:8080/ifx/local/invoke' \
    --header 'Content-Type: application/json' \
    --data-raw '{
      "key": "story:first-publish",
      "organizationId": "thisissparta",
      "body": { <YOUR STORY ANS HERE > },
      "typeId": 1,
      "version": 2,
      "time": null,
      "uuid": "",
      "invocationId": "7319faff-4db6-423b-915f-6b8f0c3a6487"
    }'

9. After starting your integration, send the above event to your locally running integration server for testing. Note that this will generate a video every time you run this test.

10. Again, remember that video generation can take a while, and IFX integrations time out after 60 seconds. Your integration will likely time out before the video is generated, which is why adding the video to Video Center is still a manual step rather than an automated one.

11. Once the video is complete, download it to your local machine so it can be uploaded to Video Center.
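Every test run spends Hedra credits, so it can be worth checking an event before sending it. The sketch below uses hypothetical helper names and does three things:
- It combines the router’s story:first-publish match with the handler’s label check.
- It wraps a story ANS object in the same envelope as the cURL example.
- It can post the result to the local invoke URL, using the global `fetch` available in Node 18 and later.

It only reports what the gate would decide; it does not mock the Draft API call the handler makes.

```javascript
// Hypothetical local-testing helpers; these names are illustrative, not IFX APIs.
const LOCAL_INVOKE_URL = 'http://127.0.0.1:8080/ifx/local/invoke';

// Combines the eventsRouter.json match (story:first-publish) with the handler's
// label check (label.ifx_testing_flag.text === "generate_video").
function shouldGenerateVideo(event) {
  return (
    event?.key === 'story:first-publish' &&
    event?.body?.label?.ifx_testing_flag?.text === 'generate_video'
  );
}

// Wraps a story ANS object in the same envelope used by the cURL example.
function buildFirstPublishEvent(ans, invocationId = '7319faff-4db6-423b-915f-6b8f0c3a6487') {
  return {
    key: 'story:first-publish',
    organizationId: 'thisissparta',
    body: ans,
    typeId: 1,
    version: 2,
    time: null,
    uuid: '',
    invocationId,
  };
}

// Posts the event to the locally running integration and returns the raw
// response text (requires the global fetch in Node 18+).
async function invokeLocally(event) {
  const response = await fetch(LOCAL_INVOKE_URL, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(event),
  });
  return response.text();
}
```

A typical dry run builds the event and logs `shouldGenerateVideo(event)`. It then calls `invokeLocally(event)` only when you actually want to pay for a video.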

Adding the Video to Video Center

We have a video! Now what? This is where the fun begins. We need to add the video to Arc Video Center so that it can be used in Composer and on your story page.

1. In Arc Admin, click on the “Video Center” tile.

2. In Video Center, click “Create Video” and select the video type and the Primary Website (if applicable), then click “Create”.

3. You will be redirected to a shell for your new video. Click “Upload New Version” and select the video you just downloaded from Hedra.

4. While it is uploading, add the required fields in the “Video Details” section.

5. Once you’ve added all needed details and the upload is complete, click “Save” to save the video.

6. And we are done! The video can be added to your specific story, added to video galleries, or anywhere else in your Arc environment.

Next Steps

In a perfect world, this whole thing would be automated and we wouldn’t need to manually upload each video to Video Center. At the time of this writing, Hedra’s API does not support webhook callbacks or other notifications when a video has finished generating. Once that is available, we could create an IFX webhook that receives the “video complete” callback and uploads the video to Video Center for us.

Right now, we could use an IFX Scheduled Event to check Hedra’s API every few minutes for any videos with the status of “Complete”. We would also need to make sure we don’t upload duplicate videos, and that kind of complexity goes beyond the scope of a blog post. But it IS possible to do this with IFX and Hedra’s API with some time investment.
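Below is a sketch of the selection step such a scheduled event might run on each poll. It uses the “Complete” status string from the paragraph above. It also assumes each Hedra project is an object with `id`, `status`, and `videoUrl` fields. Those field names and the function name are assumptions, so check them against Hedra’s API. The already-uploaded IDs would need to be persisted somewhere between scheduled runs.

```javascript
// Hypothetical selection step for a scheduled poll. Assumes each project looks
// like { id, status, videoUrl }; adjust the field names to Hedra's real response.
function selectVideosToUpload(projects, uploadedIds) {
  const seen = new Set(uploadedIds); // IDs uploaded on earlier runs
  const selected = [];
  for (const project of projects) {
    // Only finished videos with a downloadable URL are candidates.
    if (project.status !== 'Complete' || !project.videoUrl) continue;
    if (seen.has(project.id)) continue; // already uploaded, skip duplicate
    seen.add(project.id); // also guards against repeats within one response
    selected.push({ id: project.id, videoUrl: project.videoUrl });
  }
  return selected;
}
```

After uploading each selected video to Video Center, the run would append its ID to the persisted list so the next poll skips it.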

Aaaaand we are done!

And here we are: we have a virtual news anchor that can read stories to our customers. This improves accessibility for our visually impaired customers and may also improve our SEO. We can post these videos to social media or use them in our newsletters.